MiddleBit: the roundabout at the intersection of art and technology

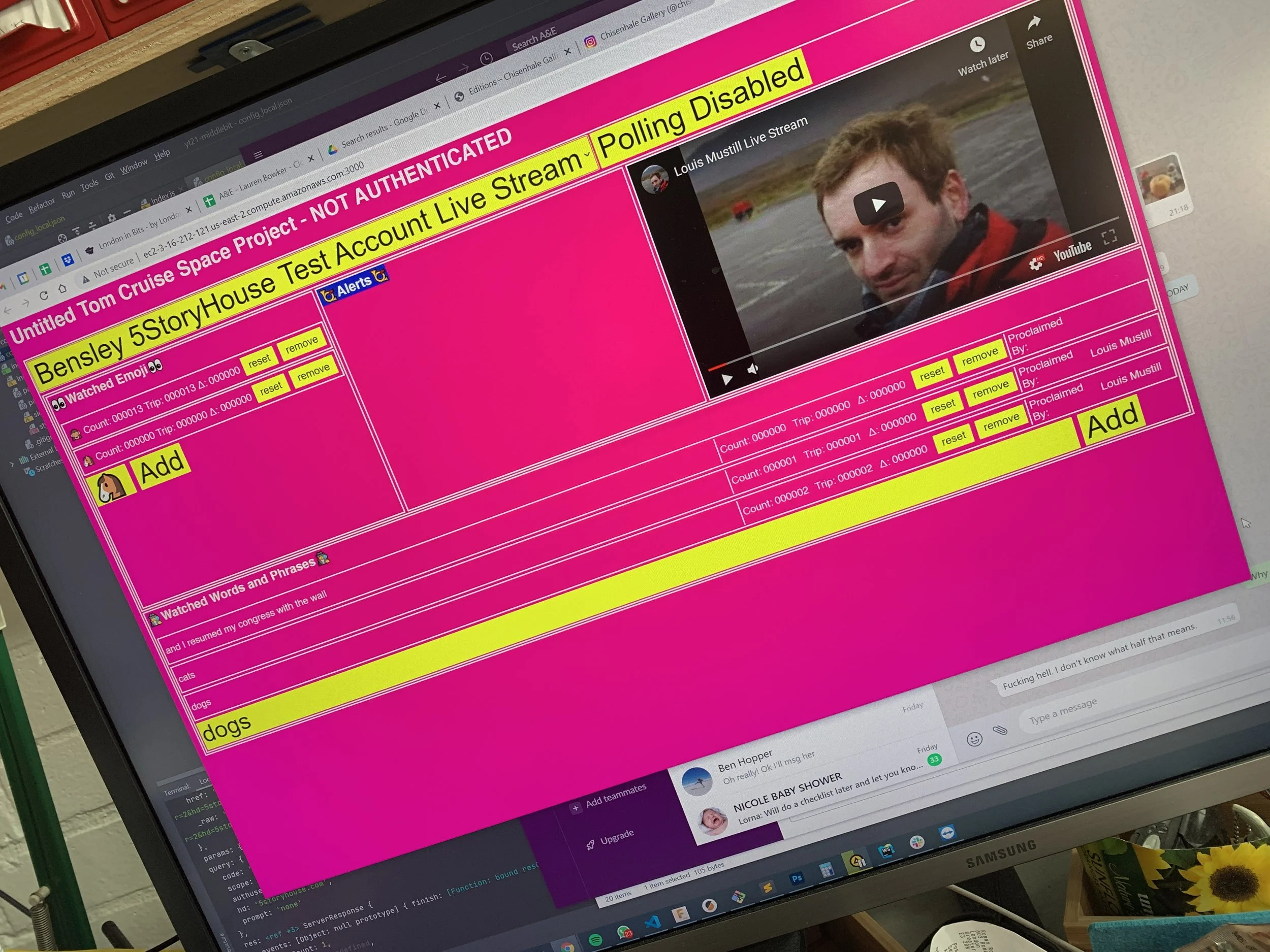

MiddleBit is our control, sensing and process-control toolkit. It was born in 2018 as the show control system for a Proustian escape room, an allegory for the perils of consumption. Since then it has flown a laser-carrying robot above a rave, managed the instrumentation in a solar-powered, human-scale animal burrow, controlled the lighting in architectural models, interpreted sensor data for an interactive archery experience, captured the voices and hopes of a lot of people, pulled the balls in a game of futuristic space bingo, aggregated real-time interaction data from thousands of users, mixed personalised blends of whiskey, and supervised machine-learning pipelines for object classification.

So named because it is the Bit in the Middle, MiddleBit can talk to and listen to anything* in any language**. It can be deployed locally, in the cloud, or both; it runs on any operating system; it generates detailed logs and reports; it can supervise any process or workflow; and it has only ever done the wrong thing once.

MiddleBit is optimised for fast development and iteration. Most MiddleBit solutions are developed in less than two days.

MiddleBit is usually, but not always, pink.

*Systems, APIs, and tools we've interacted with: Twilio, YouTube/Google, Pusher, Ableton, QLab, Velocio, d3, grandMA, Avolites, Vicon, OptiTrack, Adobe Creative Suite, AWS CloudFormation, Roomba, and a lot of things we built ourselves.

**Protocols/transports we've used: raw TCP/UDP, WebSockets, OSC, Art-Net, MIDI, sACN, serial, WS2812, Modbus, 0-10V, DALI, DMX, NatNet, Vicon Control, HP-GL, G-code, XBee, GigE Vision, Roomba. Protocols are implemented as and when we need them.